Unlocking the Power of Trial and Error: Reinforcement Learning Paves the Way for Solving Complex Problems in Robotics, Finance, Healthcare and More!

Exploring the Components and Key Steps in Reinforcement Learning for Optimal Decision-Making.

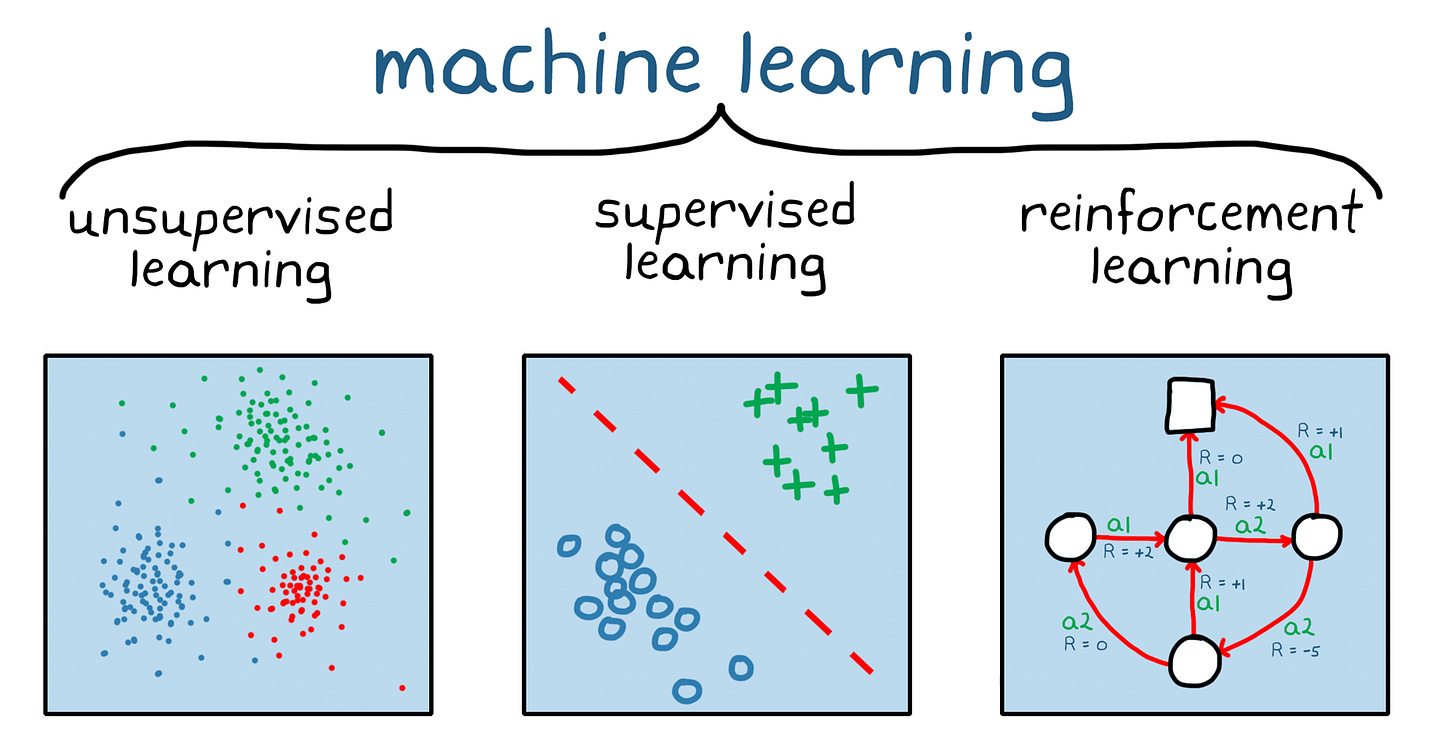

Reinforcement Learning (RL) is a subfield of machine learning that involves developing algorithms and models that enable agents to learn by interacting with an environment. It is based on the idea that an agent can improve its behaviour through trial and error by receiving feedback from the environment in the form of rewards or punishments.

The agent's goal is to learn a policy that maximises the cumulative reward it receives over time.
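To make "cumulative reward" concrete, here is a minimal sketch of how the return of a single episode is often computed. The discount factor `gamma` is an assumption added for illustration (the article does not specify one); it weights near-term rewards more heavily than distant ones, and setting it to 1.0 gives a plain sum.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of gamma**t * rewards[t] over one episode.

    `gamma` is an illustrative assumption, not a value from the article.
    """
    total = 0.0
    # Walk backwards so each step costs one multiply and one add.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


episode_rewards = [0.0, 0.0, 1.0]  # reward only arrives at the last step
print(discounted_return(episode_rewards, gamma=0.5))  # 0.25
print(discounted_return(episode_rewards, gamma=1.0))  # 1.0
```

The same reward is worth less the later it arrives, which is one common way of encoding a preference for reaching the goal quickly.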

Reinforcement Learning algorithms are designed to enable the agent to learn from experience, and they are particularly useful in scenarios where the optimal solution is difficult to determine.

RL has been successfully applied in various domains such as robotics, game playing, finance, and healthcare, and it has shown promise on complex problems that were previously considered intractable.

Components of Reinforcement Learning

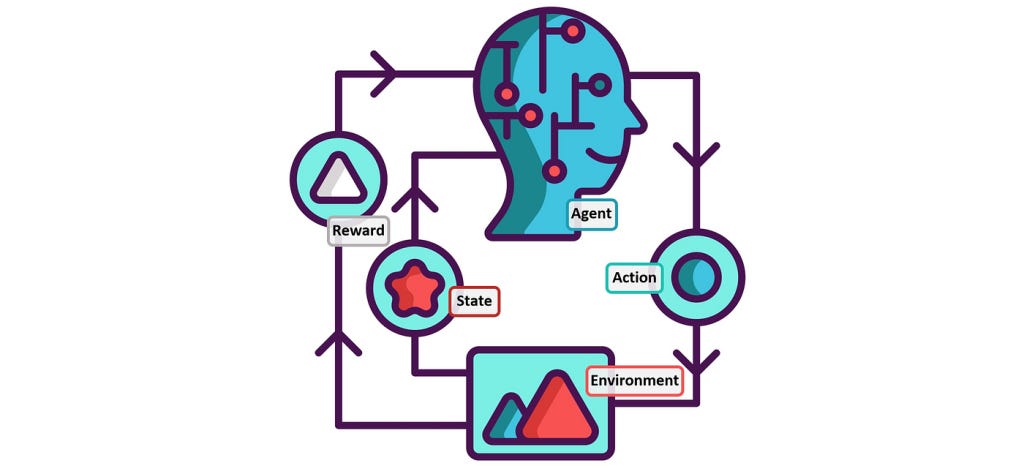

The Reinforcement Learning framework comprises four essential components: the agent, the environment, actions, and rewards. The agent is the decision-maker, and its goal is to maximise the cumulative reward it receives from the environment over time. The environment is the external context in which the agent operates, and it provides feedback to the agent in the form of rewards. Actions are the decisions made by the agent in response to the environment, and together with the environment's dynamics they determine the next state. Finally, rewards are numerical signals that indicate how good or bad the outcome of the agent's actions was.

Several further concepts build on these four. The full set is:

Agent: An agent is an entity that interacts with the environment to achieve a specific goal. The agent receives observations from the environment and takes actions based on those observations. The actions taken by the agent affect the state of the environment, and the environment provides feedback in the form of rewards or punishments.

Environment: The environment is the external world that the agent interacts with. It includes all the states, actions, and rewards that the agent encounters during its interaction with the world. The environment can be deterministic or stochastic, depending on whether the next state is fully determined by the current state and action or not.

State: The state of the environment is a representation of the current situation. It includes all the relevant information that the agent needs to make decisions. The state can be fully observable or partially observable, depending on whether the agent has access to all the relevant information or not.

Action: An action is a decision made by the agent based on the current state. The agent takes actions to influence the environment and achieve its goal. The action can be discrete or continuous, depending on whether the agent chooses from a finite set of actions or from a continuous range of values.

Reward: A reward is a feedback signal from the environment that indicates how well the agent is performing. The agent's goal is to maximise the cumulative reward it receives over time. The reward can be positive, negative, or zero, depending on whether the agent is doing well, doing poorly, or neither.

Policy: A policy is a mapping from states to actions. It defines how the agent should behave in each state to maximise its expected cumulative reward. The policy can be deterministic or stochastic, depending on whether the action is chosen with certainty or not.

Value Function: A value function is a mapping from states or state-action pairs to expected cumulative rewards. It estimates the expected cumulative reward that the agent will receive starting from a given state or state-action pair and following a particular policy. The value function is used to evaluate and improve the policy.

Model: A model is a representation of the environment's dynamics. It predicts the next state and reward given the current state and action. The model can be used for planning and simulation.

Exploration vs. Exploitation: Exploration refers to the process of trying out different actions to discover the best possible policy. Exploitation refers to the process of taking the actions that currently look best according to what the agent has learned so far. RL algorithms must balance the two: with too little exploration, the agent can get stuck in a local optimum.
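The components above can be sketched together in a few lines. The example below is an illustration under stated assumptions, not a reference implementation: the "environment" is a hypothetical multi-armed bandit with Gaussian rewards, the agent's "value function" is a running average per arm, and the policy is epsilon-greedy, one common way to trade off exploration against exploitation. The names `run_bandit`, `arm_means` and `epsilon` are made up for this sketch.

```python
import random


def run_bandit(arm_means, steps=5000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a hypothetical Gaussian multi-armed bandit."""
    rng = random.Random(seed)
    n_arms = len(arm_means)
    estimates = [0.0] * n_arms  # running estimate of each arm's mean reward
    counts = [0] * n_arms       # how often each arm has been pulled
    total_reward = 0.0
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random action.
            action = rng.randrange(n_arms)
        else:
            # Exploit: take the action that currently looks best.
            action = max(range(n_arms), key=lambda a: estimates[a])
        # Environment feedback: a noisy reward around the arm's true mean.
        reward = rng.gauss(arm_means[action], 1.0)
        counts[action] += 1
        # Incremental mean update of the value estimate for this action.
        estimates[action] += (reward - estimates[action]) / counts[action]
        total_reward += reward
    return estimates, counts, total_reward


estimates, counts, total_reward = run_bandit([0.2, 0.5, 1.5])
print("pulls per arm:", counts)
print("estimated means:", [round(e, 2) for e in estimates])
```

With `epsilon=0.0` the agent never explores and can lock onto whichever arm happened to pay out first; with `epsilon=1.0` it never uses what it has learned.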

Types of Reinforcement Learning Algorithms

There are two main types of Reinforcement Learning algorithms: model-based and model-free.

Model-based algorithms learn a model of the environment, which can be used to make predictions about future states and rewards.
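As a minimal sketch of what "learning a model" can mean in the simplest tabular case, the snippet below estimates transition probabilities and average rewards by counting observed transitions. The state and action names are hypothetical, and real model-based methods usually use far richer models than a lookup table.

```python
from collections import defaultdict


def fit_tabular_model(transitions):
    """Estimate P(next_state | state, action) and the mean reward
    from (state, action, reward, next_state) tuples."""
    next_counts = defaultdict(lambda: defaultdict(int))
    reward_sums = defaultdict(float)
    visits = defaultdict(int)
    for state, action, reward, next_state in transitions:
        key = (state, action)
        next_counts[key][next_state] += 1
        reward_sums[key] += reward
        visits[key] += 1
    # Turn raw counts into empirical probabilities and average rewards.
    probs = {
        key: {s2: c / visits[key] for s2, c in nxt.items()}
        for key, nxt in next_counts.items()
    }
    mean_reward = {key: reward_sums[key] / visits[key] for key in visits}
    return probs, mean_reward


# Hypothetical experience: "right" from s0 usually, but not always, reaches s1.
experience = [
    ("s0", "right", 0.0, "s1"),
    ("s0", "right", 0.0, "s1"),
    ("s0", "right", 0.0, "s0"),
    ("s0", "right", 0.0, "s1"),
    ("s1", "right", 1.0, "s2"),
]
probs, mean_reward = fit_tabular_model(experience)
print(probs[("s0", "right")])        # {'s1': 0.75, 's0': 0.25}
print(mean_reward[("s1", "right")])  # 1.0
```

Once such a model exists, the agent can simulate "what would happen if" without touching the real environment, which is what makes planning possible.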

Model-free algorithms, on the other hand, do not learn or require an explicit model of the environment. Instead, they learn directly from experience, relying on trial and error to find a good policy for the agent.

The models learned by model-based algorithms can be either deterministic or stochastic and are used to predict the outcome of different actions.

Another way to classify Reinforcement Learning algorithms is based on the type of learning they use.

There are two main types of learning: value-based and policy-based.

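Value-based algorithms learn a value function, which estimates the expected cumulative reward from a given state or state-action pair. The policy is then derived from it, for example by picking the action with the highest estimated value.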
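Tabular Q-learning is a well-known value-based (and model-free) algorithm, and a small sketch shows the idea. Everything about the environment here is a hypothetical toy: a five-state corridor where the agent starts on the left and is rewarded only for reaching the right end. The hyperparameters `alpha`, `gamma` and `epsilon` are illustrative choices, not recommendations.

```python
import random

N_STATES = 5        # states 0..4; reaching state 4 ends the episode
ACTIONS = (-1, +1)  # step left or step right


def step(state, action):
    """Hypothetical deterministic corridor environment."""
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    reward = 1.0 if done else 0.0
    return next_state, reward, done


def q_learning(episodes=500, alpha=0.1, gamma=0.9, epsilon=0.1, seed=0):
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in range(N_STATES)]
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))  # explore
            else:
                best = max(q[state])             # exploit, random tie-break
                a = rng.choice([i for i, v in enumerate(q[state]) if v == best])
            next_state, reward, done = step(state, ACTIONS[a])
            # Target: reward plus discounted value of the best next action.
            target = reward if done else reward + gamma * max(q[next_state])
            q[state][a] += alpha * (target - q[state][a])
            state = next_state
    return q


q_table = q_learning()
# Greedy action in each non-terminal state, read off the learned values.
greedy = [ACTIONS[max(range(len(ACTIONS)), key=lambda i: row[i])]
          for row in q_table[:-1]]
print(greedy)  # [1, 1, 1, 1]
```

The policy is never stored explicitly: it falls out of the value table by taking the highest-valued action in each state.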

Policy-based algorithms learn a policy directly, typically by adjusting its parameters to increase the expected reward, without the need for a value function.
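For contrast, here is a minimal policy-gradient sketch in the style of REINFORCE, again on a hypothetical two-armed bandit. The policy is a softmax over per-action preferences, and those preferences are the only thing being learned. The running-average `baseline` is an optional variance-reduction trick, and `lr` is an illustrative learning rate.

```python
import math
import random


def softmax(prefs):
    top = max(prefs)  # subtract the max for numerical stability
    exps = [math.exp(p - top) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def train_policy(arm_means, steps=3000, lr=0.1, seed=0):
    """Gradient ascent on expected reward for a softmax policy."""
    rng = random.Random(seed)
    prefs = [0.0] * len(arm_means)  # policy parameters
    baseline = 0.0                  # running average of observed rewards
    for t in range(1, steps + 1):
        probs = softmax(prefs)
        action = rng.choices(range(len(prefs)), weights=probs)[0]
        reward = rng.gauss(arm_means[action], 1.0)
        baseline += (reward - baseline) / t
        advantage = reward - baseline
        for a in range(len(prefs)):
            # Gradient of log pi(action) with respect to prefs[a].
            grad_log = (1.0 if a == action else 0.0) - probs[a]
            prefs[a] += lr * advantage * grad_log
    return softmax(prefs)


final_probs = train_policy([0.0, 2.0])
print([round(p, 3) for p in final_probs])
```

Actions that did better than average become more likely and actions that did worse become less likely, with no value table involved.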

Finally, actor-critic algorithms combine value-based and policy-based methods: a policy (the actor) is trained using feedback from a learned value function (the critic), leveraging the strengths of both.
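A one-step actor-critic can be sketched in the same toy style. This is a simplified illustration under assumptions: the corridor environment is hypothetical, the actor is a table of softmax preferences, the critic is a table of state values, and the learning rates are arbitrary.

```python
import math
import random

N_STATES = 5        # hypothetical corridor: states 0..4, state 4 is the goal
ACTIONS = (-1, +1)  # step left or step right


def step(state, action):
    next_state = min(max(state + action, 0), N_STATES - 1)
    done = next_state == N_STATES - 1
    return next_state, (1.0 if done else 0.0), done


def softmax(prefs):
    top = max(prefs)
    exps = [math.exp(p - top) for p in prefs]
    total = sum(exps)
    return [e / total for e in exps]


def actor_critic(episodes=500, actor_lr=0.1, critic_lr=0.1, gamma=0.9, seed=0):
    rng = random.Random(seed)
    prefs = [[0.0, 0.0] for _ in range(N_STATES)]  # actor: action preferences
    values = [0.0] * N_STATES                      # critic: state values
    for _ in range(episodes):
        state, done = 0, False
        while not done:
            probs = softmax(prefs[state])
            a = rng.choices(range(len(ACTIONS)), weights=probs)[0]
            next_state, reward, done = step(state, ACTIONS[a])
            # Critic: temporal difference error for the visited state.
            target = reward if done else reward + gamma * values[next_state]
            td_error = target - values[state]
            values[state] += critic_lr * td_error
            # Actor: push up actions the critic found better than expected.
            for i in range(len(ACTIONS)):
                grad_log = (1.0 if i == a else 0.0) - probs[i]
                prefs[state][i] += actor_lr * td_error * grad_log
            state = next_state
    return prefs, values


prefs, values = actor_critic()
print("P(right) per state:", [round(softmax(p)[1], 2) for p in prefs[:-1]])
print("state values:", [round(v, 2) for v in values])
```

The critic's TD error plays the role that the raw reward played in the pure policy-gradient case, which usually gives a less noisy learning signal.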

Borrowing terms from behavioural psychology, the feedback given to the agent can also be loosely grouped into three categories:

Positive Reinforcement: In positive reinforcement, the agent receives a positive reward when it performs the desired action. This reward serves as a signal to the agent that it has taken the correct action and should continue to take similar actions in the future.

Negative Reinforcement: In negative reinforcement, a desired behaviour is strengthened by removing or avoiding an unpleasant outcome. For an RL agent, this corresponds to an action that stops or avoids an ongoing penalty, which makes the agent more likely to take similar actions in the future.

Punishment: In punishment, the agent receives a negative reward (a penalty) when it performs an undesired action. This penalty serves as a signal to the agent that it has taken a wrong action and should avoid similar actions in the future. The larger the penalty, the more strongly the action is discouraged.

In most RL formulations, all three are expressed through a single numerical reward that can be positive, negative, or zero. These categories describe the feedback signal, not the learning algorithm. The algorithms that learn from that feedback include temporal difference (TD) learning, Q-learning, and policy gradient methods.

Deep Reinforcement Learning

Deep reinforcement learning is a subfield of machine learning that combines deep neural networks with reinforcement learning algorithms to enable an agent to learn to perform a task through trial and error.

In traditional reinforcement learning, an agent learns to take actions in an environment to maximise a reward signal. In deep reinforcement learning, the agent's policy or value function is typically represented by a deep neural network, which can learn a more complex mapping from input observations to actions. This approach has been successfully applied to a wide range of tasks, such as playing complex board games like Go or Chess, controlling robotic arms, and playing video games.

The basic structure of a deep reinforcement learning algorithm typically involves an agent that interacts with an environment by taking actions based on observations and receiving rewards. The agent's goal is to learn a policy that maximises the expected cumulative reward over time. In value-based methods such as deep Q-learning, this is achieved by using a deep neural network to approximate the Q-function, which is the expected cumulative reward for taking a particular action in a given state. Q-learning is not a variant of backpropagation: it supplies the training target, and backpropagation is used to fit the network to it. The network weights are updated to reduce the temporal difference error between the predicted Q-value and a target built from the reward received plus the discounted estimate of the best Q-value in the next state.
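The temporal difference error at the heart of this update is easy to compute by hand. The sketch below uses plain Python lists in place of real network outputs, so the numbers in `q_values` and `next_q_values` are made up, and `gamma` is an illustrative discount factor. In a real deep Q-learning setup the mean squared error at the end would be the loss that backpropagation minimises.

```python
def td_errors(q_values, actions, rewards, next_q_values, dones, gamma=0.99):
    """Temporal difference error for each transition in a batch.

    q_values[i][a]      stands in for the network's Q(s_i, a)
    next_q_values[i][a] stands in for the network's Q(s'_i, a)
    """
    errors = []
    for q, a, r, next_q, done in zip(q_values, actions, rewards,
                                     next_q_values, dones):
        # Terminal transitions have no future value to bootstrap from.
        target = r if done else r + gamma * max(next_q)
        errors.append(target - q[a])
    return errors


errors = td_errors(
    q_values=[[0.5, 1.0], [0.25, 0.0]],
    actions=[1, 0],
    rewards=[0.0, 1.0],
    next_q_values=[[2.0, 1.0], [0.0, 0.0]],
    dones=[False, True],
    gamma=0.5,
)
print(errors)  # [0.0, 0.75]

# Mean squared TD error: the quantity a deep Q-network would minimise.
loss = sum(e * e for e in errors) / len(errors)
print(loss)    # 0.28125
```

The first transition already matches its target, so it contributes nothing to the loss; the second underestimates the reward and would be pushed upwards.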

While deep reinforcement learning has shown great success in many domains, it can also be challenging to train due to issues such as sample inefficiency, instability, and exploration-exploitation trade-offs. However, ongoing research is addressing these challenges and pushing the boundaries of what is possible with deep reinforcement learning.

Applications of Reinforcement Learning

Reinforcement learning has been successfully applied in a variety of domains, including robotics, games, and recommender systems. In robotics, reinforcement learning has been used to train robots to perform complex tasks such as grasping objects and navigating through the environment. It has also been used to teach robots to walk: researchers at OpenAI have shown that agents trained with their algorithm can learn to walk, run and even climb stairs. In gaming, reinforcement learning has been used to train agents to play games such as chess and Go at superhuman levels. The most famous example is Go, where Google DeepMind's AlphaGo defeated world champion Lee Sedol in 2016.

Another exciting application of reinforcement learning can be found in the field of autonomous vehicles. Reinforcement learning algorithms can be used to optimise the decision-making process of self-driving cars to ensure that they make safe and efficient decisions on the road.

In recommender systems, reinforcement learning has been used to personalise recommendations for individual users based on their past behaviour.

Challenges of Reinforcement Learning

There are several challenges in reinforcement learning that researchers and practitioners are actively working to address.

Here are some of the major challenges:

Exploration-Exploitation Trade-off: In reinforcement learning, an agent must explore the environment to discover new actions that lead to higher rewards, while also exploiting the knowledge it has gained to maximise its reward. Balancing exploration and exploitation can be challenging, as too much exploration can lead to suboptimal performance, while too much exploitation can lead to the agent getting stuck in local optima.

Credit Assignment: Credit assignment refers to the challenge of correctly attributing a reward to a specific action or sequence of actions taken by the agent. This is particularly challenging when there is a delay between the action and the reward, or when multiple actions contribute to the final reward.

Sparse Rewards: Reinforcement learning algorithms rely on rewards to learn, but sometimes rewards can be sparse or delayed, which makes it challenging for the agent to learn the optimal policy.

Function Approximation: In many reinforcement learning applications, the state and action spaces are large or continuous, which makes it challenging to represent them using discrete tables. In such cases, function approximation techniques like neural networks are used to approximate the value function or the policy, but they can be prone to overfitting and instability.

Generalisation: Reinforcement learning agents should be able to generalise their learning to new environments or tasks. However, this is challenging when the agent has only been trained on a limited set of tasks or when the test environment is different from the training environment.

Safety: Reinforcement learning agents can learn to take actions that have unintended or undesirable consequences, especially in complex or dynamic environments. Ensuring the safety of the agent and its interactions with the environment is an important challenge in reinforcement learning.

Scalability: Reinforcement learning algorithms can be computationally expensive, especially when the state and action spaces are large or the environment is complex. Developing scalable reinforcement learning algorithms that can learn efficiently in large-scale environments is an active area of research.

Conclusion

Reinforcement learning is a powerful technique that has been successfully applied in a wide range of domains. The Reinforcement Learning framework has four essential components: the agent, the environment, actions, and rewards. There are two main types of Reinforcement Learning algorithms: model-based and model-free, and two main types of learning: value-based and policy-based, with actor-critic methods combining the two.

By using Reinforcement Learning, we can teach machines to make optimal decisions in scenarios where the best solution is difficult to determine. As Reinforcement Learning algorithms continue to improve, we can expect to see more and more applications of this technology in a wide range of industries.

Despite its successes, Reinforcement Learning still faces several challenges, including the exploration-exploitation trade-off and the credit assignment problem.

This article was originally published on the company weblog. You can read the original one here.

Intellicy is a consultancy firm specialising in artificial intelligence solutions for organisations seeking to unlock the full potential of their data. They provide a full suite of services, from data engineering and AI consulting to comment moderation and sentiment analysis. Intellicy’s team of experts work closely with clients to identify and measure key performance indicators (KPIs) that matter most to their business, ensuring that their solutions generate tangible results. They offer cross-industry expertise and an agile delivery framework that enables them to deliver results quickly and efficiently, often in weeks rather than months. Ultimately, Intellicy helps large enterprises transform their data operations and drive business growth through artificial intelligence and machine learning.