The Importance of Model Monitoring in Machine Learning: Techniques and Best Practices

How to Ensure Your Machine Learning Models Perform as Expected and Mitigate Potential Problems

Machine learning models have revolutionised the way we solve complex problems. With their ability to learn from data and make predictions, they are now used in fields such as healthcare, finance, and marketing.

However, as models become more complex, monitoring them becomes increasingly difficult. Yet monitoring is critical: it is how teams ensure that models perform as expected and do not drift from their intended behaviour.

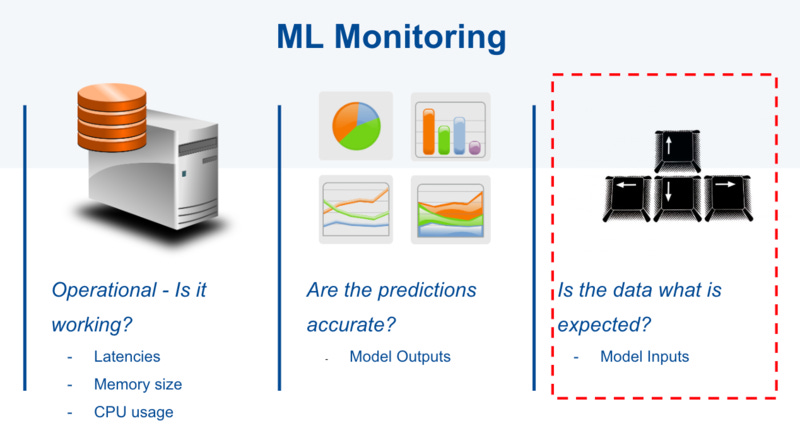

What does Model Monitoring mean?

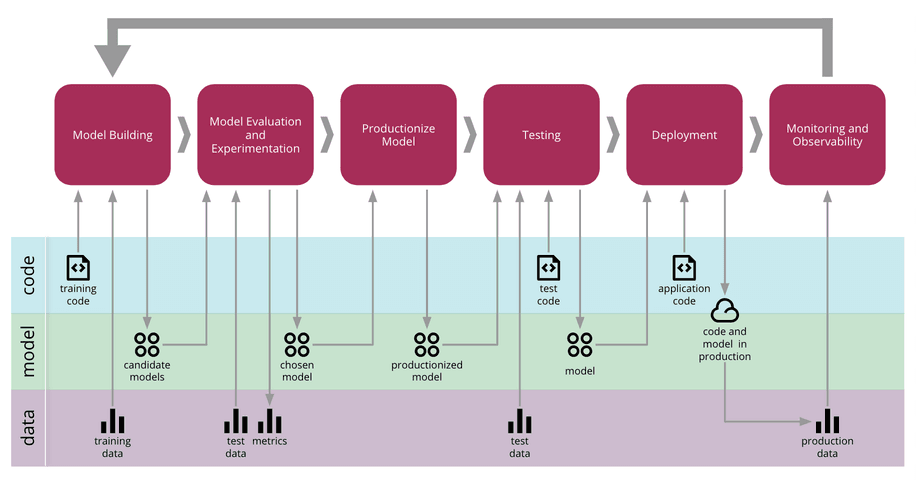

Model monitoring involves tracking the performance of a machine learning model over time to ensure that it is working as expected. It aims to detect anomalies or changes in the model's behaviour, such as a drop in accuracy, drift in its predictions, or emerging bias. Catching these early helps teams fix problems before they affect the business or the user experience.

Why is Model Monitoring Important?

Model monitoring is essential because it helps identify and mitigate problems with model performance. Some of the main reasons are:

Performance degradation: Machine learning models can degrade over time due to changes in data distribution, system updates, or other external factors. If this is not monitored, it can lead to inaccurate predictions and unreliable results.

Prediction drift: Prediction drift occurs when the distribution of the model's predictions shifts away from what is expected. This can occur due to changes in data patterns, new data sources, or other factors. If not detected early, prediction drift can lead to significant errors and undermine trust in the model.

Bias and fairness: Machine learning models may exhibit bias against certain groups, leading to unfair or discriminatory results. Model monitoring can help identify and mitigate such biases to ensure the fairness of model predictions.

Compliance: Model monitoring helps ensure compliance with regulatory requirements such as GDPR, HIPAA, and other privacy laws by detecting potential violations so that they can be addressed early.

Tools and Techniques for Model Monitoring

Various techniques are used for model monitoring, depending on the model type, the data, and the use case. Commonly used techniques include:

Statistical monitoring: Statistical monitoring tracks model performance metrics such as accuracy, precision, recall, and F1 score. Statistical process control (SPC) charts can be used to track these metrics over time and flag values that fall outside expected control limits.
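As a minimal sketch of an SPC-style check, the Python below derives Shewhart-style control limits (mean plus or minus three standard deviations) from a period when the model was known to be healthy, and flags later observations that fall outside them. The daily accuracy values are made up for illustration, and k = 3 is a common convention rather than a requirement.

```python
from statistics import mean, stdev


def control_limits(baseline: list[float], k: float = 3.0) -> tuple[float, float, float]:
    """Return (lower, centre, upper) limits: mean +/- k standard deviations."""
    centre = mean(baseline)
    sigma = stdev(baseline)
    return centre - k * sigma, centre, centre + k * sigma


def out_of_control(values: list[float], limits: tuple[float, float, float]) -> list[int]:
    """Return the indices of observations outside the control limits."""
    lower, _, upper = limits
    return [i for i, v in enumerate(values) if v < lower or v > upper]


# Hypothetical daily accuracy from a period when the model was known to be healthy.
baseline_accuracy = [0.91, 0.92, 0.90, 0.91, 0.93, 0.92, 0.91, 0.90]
# Hypothetical accuracy for the most recent production days.
recent_accuracy = [0.92, 0.91, 0.84, 0.90]

limits = control_limits(baseline_accuracy)
print(out_of_control(recent_accuracy, limits))  # → [2]
```

Only the third recent day (accuracy 0.84) falls below the lower limit, so it is the one that would be investigated.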

Data drift monitoring: Data drift monitoring tracks changes in the distribution of input data over time. This can be done by comparing the distribution of the training data with the distribution of the data the model receives in production. A significant difference between the two may indicate data drift.
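One common way to quantify data drift for a single numeric feature is the Population Stability Index (PSI). The sketch below is a simplified, standard-library version that assumes equal-width bins taken from the training data's range (quantile bins are another common choice). A frequently quoted rule of thumb treats a PSI below 0.1 as stable and above 0.25 as a significant shift, but those thresholds are heuristics to tune per feature.

```python
import math
import random


def psi(expected: list[float], actual: list[float], n_bins: int = 10) -> float:
    """Population Stability Index between a reference sample and a new sample."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins or 1.0  # guard against a constant feature

    def proportions(sample: list[float]) -> list[float]:
        counts = [0] * n_bins
        for x in sample:
            # Values outside the reference range are clamped into the edge bins.
            idx = min(max(int((x - lo) / width), 0), n_bins - 1)
            counts[idx] += 1
        # A small floor avoids log(0) and division by zero for empty bins.
        return [max(c / len(sample), 1e-6) for c in counts]

    e, a = proportions(expected), proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


rng = random.Random(0)
train = [rng.gauss(0, 1) for _ in range(5000)]
same_dist = [rng.gauss(0, 1) for _ in range(5000)]
shifted = [rng.gauss(1, 1) for _ in range(5000)]  # simulated drift: the mean moves by 1

print(f"PSI, no drift: {psi(train, same_dist):.3f}")
print(f"PSI, shifted:  {psi(train, shifted):.3f}")
```

In a monitoring job, the training sample would be stored once and each new production batch compared against it, feature by feature.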

Concept drift monitoring: Concept drift monitoring tracks changes in the underlying concepts, that is, the relationships between the input variables and the target. Because these relationships can change even when the input distribution looks stable, concept drift is usually detected by comparing the model's performance on new, labelled data with its performance on the original data.
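Detecting concept drift this way requires ground-truth labels for recent predictions. The sketch below assumes those labels eventually arrive and compares rolling accuracy over a fixed window with the accuracy recorded at training time. The class name, window size, and tolerance are illustrative choices, not a standard API; established detectors such as DDM or ADWIN follow a similar idea with statistically grounded thresholds.

```python
from collections import deque


class AccuracyDriftDetector:
    """Flags possible concept drift when rolling accuracy on labelled production data
    falls more than `tolerance` below the accuracy measured at training time."""

    def __init__(self, baseline_accuracy: float, window: int = 200, tolerance: float = 0.05):
        self.baseline = baseline_accuracy
        self.tolerance = tolerance
        self.hits = deque(maxlen=window)  # 1 = correct prediction, 0 = wrong

    def update(self, prediction: int, label: int) -> bool:
        """Record one labelled prediction; return True if drift is suspected."""
        self.hits.append(1 if prediction == label else 0)
        if len(self.hits) < self.hits.maxlen:
            return False  # not enough evidence until the window is full
        rolling_accuracy = sum(self.hits) / len(self.hits)
        return rolling_accuracy < self.baseline - self.tolerance


detector = AccuracyDriftDetector(baseline_accuracy=0.90, window=100, tolerance=0.05)
# 100 correct predictions, then the relationship changes and predictions start failing.
stream = [(1, 1)] * 100 + [(1, 0)] * 20
flags = [detector.update(pred, label) for pred, label in stream]
print(f"drift first flagged at observation {flags.index(True)}")
```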

Model Explainability: Model explainability techniques help to show how the model makes its predictions. Common techniques include SHAP values, LIME, and feature importance rankings. They can help identify biases or unfairness in the model's predictions.
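SHAP and LIME come from dedicated libraries, so instead of reproducing their APIs, the sketch below shows a simpler, model-agnostic way to produce a feature importance ranking: permutation importance. It shuffles one feature at a time and measures how much accuracy drops. Here `model` is assumed to be any function from a feature row to a label, and the toy model and data are hypothetical.

```python
import random
from typing import Callable, Sequence

Model = Callable[[Sequence[float]], int]


def accuracy(model: Model, X: list[list[float]], y: list[int]) -> float:
    return sum(model(x) == t for x, t in zip(X, y)) / len(y)


def permutation_importance(model: Model, X: list[list[float]], y: list[int],
                           n_repeats: int = 5, seed: int = 0) -> list[float]:
    """Mean drop in accuracy when each feature column is randomly shuffled."""
    rng = random.Random(seed)
    base = accuracy(model, X, y)
    importances = []
    for j in range(len(X[0])):
        drops = []
        for _ in range(n_repeats):
            column = [row[j] for row in X]
            rng.shuffle(column)
            # Copy each row and replace feature j with the shuffled value.
            X_perm = [row[:j] + [v] + row[j + 1:] for row, v in zip(X, column)]
            drops.append(base - accuracy(model, X_perm, y))
        importances.append(sum(drops) / n_repeats)
    return importances


# Hypothetical model that only looks at feature 0.
def toy_model(x: Sequence[float]) -> int:
    return int(x[0] > 0.5)


data_rng = random.Random(42)
X = [[data_rng.random(), data_rng.random()] for _ in range(500)]
y = [int(row[0] > 0.5) for row in X]

print(permutation_importance(toy_model, X, y))
```

Feature 1 scores exactly zero because the model ignores it; tracking such scores between model versions can reveal when a model starts leaning on an unexpected feature.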

Model performance testing: Model performance testing evaluates the model in scenarios such as stress tests, edge cases, and adversarial attacks. This can help identify weaknesses or vulnerabilities in the model's predictions.
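A simple form of performance testing is to check whether small input perturbations flip the model's predictions. The sketch below uses random Gaussian noise, which is a much weaker test than a true adversarial attack (adversarial methods search for the worst-case perturbation), but it is cheap enough to run on every release. The noise level, number of trials, and toy model are assumptions for illustration.

```python
import random
from typing import Callable, Sequence


def perturbation_stability(model: Callable[[Sequence[float]], int],
                           X: list[list[float]], noise: float = 0.01,
                           trials: int = 20, seed: int = 0) -> float:
    """Fraction of inputs whose predicted label never changes under small Gaussian noise."""
    rng = random.Random(seed)
    stable = 0
    for x in X:
        original = model(x)
        perturbed_preds = (model([v + rng.gauss(0, noise) for v in x]) for _ in range(trials))
        if all(p == original for p in perturbed_preds):
            stable += 1
    return stable / len(X)


# Hypothetical threshold model; inputs near 0.5 sit on its decision boundary.
def toy_model(x: Sequence[float]) -> int:
    return int(x[0] > 0.5)


print(perturbation_stability(toy_model, [[0.1], [0.9]]))    # inputs far from the boundary
print(perturbation_stability(toy_model, [[0.5], [0.501]]))  # edge cases near the boundary
```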

Automated alerting: Automated alerting involves setting up alerts to notify the team when the model's performance metrics or data distributions deviate from expected values. This can help identify and fix issues before they impact the business or user experience.
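Alert rules are often just thresholds on metrics that monitoring already computes. The sketch below evaluates a list of hypothetical rules against the latest metric values and returns human-readable messages; in practice those messages would be routed to whatever notification channel the team uses. The metric names and thresholds are illustrative assumptions, and treating a missing metric as an alert is a design choice so that a broken pipeline does not fail silently.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AlertRule:
    metric: str
    min_value: Optional[float] = None  # alert if the metric falls below this
    max_value: Optional[float] = None  # alert if the metric rises above this


def evaluate(rules: list[AlertRule], metrics: dict[str, float]) -> list[str]:
    """Return one message per violated rule."""
    alerts = []
    for rule in rules:
        value = metrics.get(rule.metric)
        if value is None:
            # A missing metric usually means the monitoring pipeline itself broke.
            alerts.append(f"{rule.metric}: missing from latest metrics")
        elif rule.min_value is not None and value < rule.min_value:
            alerts.append(f"{rule.metric}={value:.3f} below {rule.min_value}")
        elif rule.max_value is not None and value > rule.max_value:
            alerts.append(f"{rule.metric}={value:.3f} above {rule.max_value}")
    return alerts


# Hypothetical rules and metric values.
rules = [
    AlertRule("f1", min_value=0.80),
    AlertRule("recall", min_value=0.70),
    AlertRule("psi_income", max_value=0.25),
]
latest = {"f1": 0.76, "psi_income": 0.12}

for message in evaluate(rules, latest):
    print(message)
```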

Best Practices for Machine Learning Model Monitoring

There are several best practices for machine learning model monitoring. These include:

Define metrics: Before monitoring a model, defining the metrics used to evaluate its performance is crucial. This includes metrics such as accuracy, precision, recall, and F1 score, as well as any other metrics relevant to the application.
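For a binary classifier, the four metrics named above can be computed directly from predictions and labels. The sketch below is a dependency-free version for illustration (libraries such as scikit-learn provide equivalent, better-tested functions). It assumes label 1 is the positive class and returns 0.0 when a ratio is undefined, which is one convention among several.

```python
def classification_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall, and F1 for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)
    correct = sum(1 for t, p in pairs if t == p)

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": correct / len(pairs), "precision": precision,
            "recall": recall, "f1": f1}


print(classification_metrics([1, 0, 1, 1, 0, 0], [1, 0, 0, 1, 1, 0]))
```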

Establish a baseline: Establishing a baseline for the model's performance is critical for detecting changes in performance. The baseline should be recorded during model development, for example on a held-out validation set, and then serves as the reference point for future monitoring.
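A baseline is most useful when it is stored alongside the model so that later monitoring jobs can compare against it. The sketch below assumes the baseline consists of validation-set metrics plus simple per-feature summary statistics, serialised to JSON. The field names and example values are hypothetical, and a real baseline might also store per-feature histograms for later drift comparisons.

```python
import json
import statistics
from datetime import datetime, timezone


def build_baseline(metrics: dict[str, float], features: dict[str, list[float]]) -> dict:
    """Snapshot of validation metrics and per-feature summary statistics."""
    return {
        "created_at": datetime.now(timezone.utc).isoformat(),
        "metrics": metrics,
        "features": {
            name: {
                "mean": statistics.mean(values),
                "stdev": statistics.stdev(values),
                "min": min(values),
                "max": max(values),
            }
            for name, values in features.items()
        },
    }


# Hypothetical validation results and feature samples.
baseline = build_baseline(
    metrics={"accuracy": 0.91, "f1": 0.88},
    features={
        "age": [23, 35, 41, 52, 29],
        "income": [32_000, 54_000, 61_000, 47_000, 39_000],
    },
)
serialised = json.dumps(baseline, indent=2)  # e.g. stored next to the model artefact
print(serialised)
```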

Monitor input data: Monitoring the input data that the model receives is critical to detecting changes in the data distribution. If the input data changes significantly, the model's performance may degrade, and it may need to be retrained.
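Besides distribution changes, many input problems are simpler: missing fields or values outside a plausible range, often caused by upstream pipeline changes. The sketch below assumes a hypothetical schema of expected numeric ranges per feature and reports the share of rows in a batch that violate it; validation libraries such as Great Expectations or pandera offer richer versions of this idea.

```python
def validate_batch(rows: list[dict], schema: dict[str, tuple[float, float]]) -> dict[str, float]:
    """Share of rows, per feature, that are missing or outside the expected range."""
    report = {}
    for feature, (low, high) in schema.items():
        bad = 0
        for row in rows:
            value = row.get(feature)
            if value is None or not (low <= value <= high):
                bad += 1
        report[feature] = bad / len(rows)
    return report


schema = {"age": (18, 100), "income": (0, 1_000_000)}  # hypothetical expected ranges
batch = [
    {"age": 34, "income": 52_000},
    {"age": 17, "income": 48_000},  # age below the expected range
    {"age": 45},                    # income missing
    {"age": 61, "income": 75_000},
]
print(validate_batch(batch, schema))  # → {'age': 0.25, 'income': 0.25}
```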

Monitor output data: Monitoring the output data that the model produces is critical for detecting unexpected results or errors. This can be done by comparing the model's predictions to ground truth labels or, when labels are not yet available, by applying anomaly detection methods to the predictions themselves.
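When ground-truth labels arrive late, the output distribution itself can still be monitored. The sketch below assumes a binary classifier and checks whether the share of positive predictions in a batch is statistically far from the rate seen during validation, using a normal approximation to the binomial. The baseline rate (which must lie strictly between 0 and 1) and the z-threshold are illustrative assumptions.

```python
import math


def prediction_rate_alert(predictions: list[int], baseline_rate: float,
                          z_threshold: float = 3.0) -> bool:
    """Return True if the batch's positive-prediction rate deviates from the baseline
    by more than `z_threshold` standard errors (normal approximation to the binomial)."""
    n = len(predictions)
    rate = sum(predictions) / n
    std_err = math.sqrt(baseline_rate * (1 - baseline_rate) / n)
    z = (rate - baseline_rate) / std_err
    return abs(z) > z_threshold


# Hypothetical: the model flagged 10% of cases as positive during validation.
normal_batch = [1] * 110 + [0] * 890
suspicious_batch = [1] * 200 + [0] * 800
print(prediction_rate_alert(normal_batch, baseline_rate=0.10))      # → False
print(prediction_rate_alert(suspicious_batch, baseline_rate=0.10))  # → True
```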

Use visualisation tools: Visualisation tools, such as monitoring dashboards, can help you track the model's performance and detect changes in real time, so that problems can be addressed before they become serious.

Implement alerts: Implementing alerts is important to notify stakeholders when the model's performance falls below a certain threshold. This can include email alerts, SMS notifications, or other notification methods.

Retrain when necessary: If the model's performance degrades significantly, it may need to be retrained using new data or a different algorithm. Retraining can help restore its performance and reduce the risk of future problems.

Approaches for Model Retraining

Machine learning models learn from data and make predictions based on it. However, as the data changes, models can become less accurate or even ineffective. To address this, organisations need to retrain their models using new data or a different algorithm. Common approaches to retraining include:

Incremental Learning: Incremental (or online) learning updates the machine learning model as new data becomes available. Typically, the existing model's parameters are updated with each new example or small batch as it arrives; some variants instead train a model on the new data and combine it with the existing one. The advantage of this approach is that the model is continuously updated without requiring a complete retrain.
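To make the idea concrete, the sketch below implements incremental learning for a logistic regression classifier with stochastic gradient descent: each labelled example nudges the existing weights instead of triggering a full retrain. It is a toy, standard-library version under assumed settings (learning rate, feature count, synthetic data); in practice, libraries such as scikit-learn expose the same pattern through `partial_fit` on estimators like `SGDClassifier`.

```python
import math
import random


class OnlineLogisticRegression:
    """Binary logistic regression updated one example at a time with SGD."""

    def __init__(self, n_features: int, learning_rate: float = 0.1):
        self.w = [0.0] * n_features
        self.b = 0.0
        self.lr = learning_rate

    def predict_proba(self, x: list[float]) -> float:
        z = self.b + sum(wi * xi for wi, xi in zip(self.w, x))
        # Numerically stable sigmoid.
        if z >= 0:
            return 1.0 / (1.0 + math.exp(-z))
        ez = math.exp(z)
        return ez / (1.0 + ez)

    def partial_fit(self, x: list[float], y: int) -> None:
        """Single SGD step: the log-loss gradient for one example is (p - y) * x."""
        error = self.predict_proba(x) - y
        self.w = [wi - self.lr * error * xi for wi, xi in zip(self.w, x)]
        self.b -= self.lr * error


# Simulated stream: the label is 1 when the two (hypothetical) features sum to more than 1.
rng = random.Random(0)
model = OnlineLogisticRegression(n_features=2)
for _ in range(2000):
    x = [rng.random(), rng.random()]
    model.partial_fit(x, int(x[0] + x[1] > 1))

print(round(model.predict_proba([0.9, 0.9]), 3), round(model.predict_proba([0.1, 0.1]), 3))
```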

Batch Learning: Batch learning periodically retrains the machine learning model on a batch of newly collected data, often combined with historical data. Because the whole model is refit, this approach allows for more comprehensive updates, which can improve its accuracy and effectiveness.
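The sketch below illustrates batch retraining on a sliding window: labelled production examples are buffered, and once `batch_size` new ones have arrived the model is refit from scratch on the most recent `window` examples. A nearest-centroid classifier stands in for a real model so the example stays dependency-free; the class names, batch size, and window size are hypothetical.

```python
from statistics import mean


def fit_centroids(X: list[list[float]], y: list[int]) -> dict[int, list[float]]:
    """Train a nearest-centroid classifier: one mean feature vector per class."""
    centroids = {}
    for label in set(y):
        rows = [x for x, t in zip(X, y) if t == label]
        centroids[label] = [mean(col) for col in zip(*rows)]
    return centroids


def predict(centroids: dict[int, list[float]], x: list[float]) -> int:
    """Return the class whose centroid is closest in squared Euclidean distance."""
    return min(centroids, key=lambda c: sum((a - b) ** 2 for a, b in zip(centroids[c], x)))


class BatchRetrainer:
    """Buffers labelled data and refits on the latest `window` examples
    every time `batch_size` new examples have been added."""

    def __init__(self, X: list[list[float]], y: list[int],
                 batch_size: int = 500, window: int = 5000):
        self.X, self.y = list(X), list(y)
        self.batch_size, self.window = batch_size, window
        self.pending = 0
        self.model = fit_centroids(self.X, self.y)

    def add(self, x: list[float], label: int) -> bool:
        """Store one labelled example; return True if this triggered a retrain."""
        self.X.append(x)
        self.y.append(label)
        self.pending += 1
        if self.pending < self.batch_size:
            return False
        # Keep only the most recent examples, then refit the whole model.
        self.X, self.y = self.X[-self.window:], self.y[-self.window:]
        self.model = fit_centroids(self.X, self.y)
        self.pending = 0
        return True


# Hypothetical scenario: feature values for both classes shift upwards over time.
retrainer = BatchRetrainer([[0.0], [1.0]], [0, 1], batch_size=3, window=3)
retrained = [retrainer.add(x, t) for x, t in [([5.0], 0), ([6.0], 1), ([5.5], 0)]]
print(retrained)        # → [False, False, True]
print(retrainer.model)  # → {0: [5.25], 1: [6.0]}
```

Before the retrain, an input of 5.1 would have been assigned to class 1 (nearest to the old centroid 1.0); afterwards it is assigned to class 0.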

Transfer Learning: Transfer learning uses a pre-trained model as the starting point for a new model, reusing the knowledge the pre-trained model has already learned so that the new model can be trained on a smaller dataset. This can shorten training time and, when labelled data is scarce, often produces more accurate models than training from scratch.

Fine-Tuning: Fine-tuning continues training a pre-trained model on a new dataset, adjusting its parameters so that it fits the new data better. Because training starts from parameters that are already good, it typically needs fewer training iterations than training from scratch to reach good accuracy.
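In its simplest form, fine-tuning means starting from a pre-trained model's parameters rather than from scratch and continuing to train on the new data, often with a small learning rate. The sketch below shows that warm-start pattern on a one-feature linear regression using full-batch gradient descent on mean squared error. The "pre-trained" weights and the new data are hypothetical; in deep learning the same idea is applied to the weights of a pre-trained network.

```python
def mse(w: list[float], b: float, X: list[list[float]], y: list[float]) -> float:
    preds = [b + sum(wi * xi for wi, xi in zip(w, x)) for x in X]
    return sum((p - t) ** 2 for p, t in zip(preds, y)) / len(y)


def fine_tune(w: list[float], b: float, X: list[list[float]], y: list[float],
              learning_rate: float = 0.01, epochs: int = 50) -> tuple[list[float], float]:
    """Continue training a linear model from existing parameters (a warm start)
    with full-batch gradient descent on mean squared error."""
    w, n = list(w), len(X)
    for _ in range(epochs):
        errors = [b + sum(wi * xi for wi, xi in zip(w, x)) - t for x, t in zip(X, y)]
        grad_w = [2 / n * sum(e * x[j] for e, x in zip(errors, X)) for j in range(len(w))]
        grad_b = 2 / n * sum(errors)
        w = [wi - learning_rate * g for wi, g in zip(w, grad_w)]
        b -= learning_rate * grad_b
    return w, b


# Hypothetical pre-trained model learned y = 2x; the new data follow y = 2x + 1.
pretrained_w, pretrained_b = [2.0], 0.0
X_new = [[0.0], [1.0], [2.0], [3.0], [4.0]]
y_new = [1.0, 3.0, 5.0, 7.0, 9.0]

w, b = fine_tune(pretrained_w, pretrained_b, X_new, y_new, learning_rate=0.05, epochs=200)
print(mse(pretrained_w, pretrained_b, X_new, y_new), mse(w, b, X_new, y_new))
```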

Hybrid Approach: A hybrid approach combines multiple approaches to retrain the machine learning model.

For example, an organisation might use incremental learning to update the model in real time and batch learning to periodically retrain it on a large batch of new data. The advantage of this approach is that it provides real-time updates while still allowing periodic, more comprehensive ones.

Future Directions in Model Monitoring

Future directions in model monitoring may include using machine learning itself to detect anomalies and changes in model behaviour. Examples include unsupervised learning to detect abnormal patterns in the data and reinforcement learning to learn how to adapt to changing conditions.

Another direction is explainable AI, which can help stakeholders understand how models make decisions and identify potential problems.

In addition, monitoring systems are likely to become more scalable and automated, able to handle large numbers of models in real time. This could involve cloud-based platforms and distributed computing technologies that process large amounts of data quickly and efficiently.

Another important direction for model monitoring is the development of ethical and fair AI. As more companies rely on machine learning models to make decisions, ensuring they are free of bias and discrimination is crucial. This may involve using fairness metrics and explainability techniques to identify and mitigate potential data or model biases.

Finally, future monitoring may integrate machine learning models with other technologies such as blockchain and the Internet of Things (IoT), enabling more sophisticated systems that track model performance in real time and provide insights into model behaviour.

For example, in a manufacturing plant, IoT sensor data can be used to check a machine learning model's predictions against real-world conditions, so that stakeholders can identify issues and take corrective action before they become serious problems.

Challenges in Model Monitoring

Ensuring that a model performs well and produces accurate results is crucial. However, model monitoring can be challenging for several reasons, including:

Data drift: Data drift occurs when the distribution of the data the model is applied to changes over time. It can degrade model performance and can be difficult to detect and fix.

Model drift: Model drift occurs when the model's behaviour or effectiveness changes over time. It can happen even when the input data appears stable, for example because of changes in the business context or changes to the model itself, as well as because of changes in the underlying data.

Concept drift: Concept drift occurs when the underlying concept that the model tries to capture changes over time. For example, a model trained to detect fraud based on specific patterns may become outdated as fraudsters change their tactics.

Explainability: As models become more complex, it can become difficult to interpret the results and understand how they were produced. This makes it challenging to detect and address issues with the model.

Bias: Models can be biased if the data used to train them is biased. This can result in unfair or discriminatory outcomes, which can be challenging to detect and address.

Scalability: Monitoring many models in real time can be challenging, especially as the number of models grows.

Resource constraints: Model monitoring can be resource-intensive, requiring significant computing power and storage space.

To address these challenges, organisations must invest in robust monitoring and management systems that detect issues quickly and accurately. This involves developing automated systems for tracking performance metrics, implementing regular data checks, and investing in explainable models that are easier to interpret.

Conclusion

In summary, monitoring is essential to ensuring the accuracy and reliability of machine learning models. By monitoring a model's performance in real time, stakeholders can identify problems before they become serious. Best practices for monitoring machine learning models include defining metrics, establishing a baseline, monitoring input and output data, using visualisation tools, implementing alerts, and retraining the model when necessary.

Retraining is essential for keeping machine learning models accurate and effective over time. Organisations can use various approaches, such as incremental learning, batch learning, transfer learning, fine-tuning, or a hybrid approach. By choosing the right approach, or combination of approaches, organisations can ensure that their machine learning models continue to deliver accurate results and drive business value.

Future directions in model monitoring include advanced techniques such as machine learning, explainable AI, and distributed computing, which can make monitoring systems more scalable and automated. Developing ethical and fair AI is also critical for ensuring that machine learning models are free from bias and discrimination.

By following these practices, stakeholders can ensure their machine learning models work as intended and deliver accurate results.

This article was originally published on the company blog.

Intellicy is a consultancy firm specialising in artificial intelligence solutions for organisations seeking to unlock the full potential of their data. They provide a full suite of services, from data engineering and AI consulting to comment moderation and sentiment analysis. Intellicy's team of experts work closely with clients to identify and measure key performance indicators (KPIs) that matter most to their business, ensuring that their solutions generate tangible results. They offer cross-industry expertise and an agile delivery framework that enables them to deliver results quickly and efficiently, often in weeks rather than months. Ultimately, Intellicy helps large enterprises transform their data operations and drive business growth through artificial intelligence and machine learning.