Pick the Perfect Data Model: Practical Steps for Modern Teams

A no-jargon playbook for matching star schemas, EAV, NoSQL and hybrid patterns to real-world goals.

Poorly organised data punishes every part of the business. Queries run longer, cloud invoices creep up, analysts second-guess results and machine-learning models drift because source tables keep changing. A sound data-modelling pattern—picked for the workload in front of you, not for fashion—cuts through that waste. It lets product teams roll out new features without rewiring dashboards, supports real-time use–cases and keeps auditors happy when they ask, “Where did this number come from?”

This guide lays out a practical path to choose (and combine) star schemas, EAV tables, NoSQL documents and graph stores. You’ll see when each pattern shines, where it trips up and how to blend them so today’s quick win doesn’t become tomorrow’s bottleneck. Whether you’re refactoring a monolith or planning a green-field platform, the steps below will help you match structure to scale—backed by lessons Intellicy applies every day with clients across Australia and beyond.

When Model Choice Matters

Kicking off a brand-new data platform is the obvious moment to lock in the right structure, but it’s not the only one. If the organisation is stepping up from overnight batch reports to customer-facing, real-time services, the relational model that once powered static dashboards can stall under millisecond SLAs. The same is true when data scientists move from ad-hoc notebooks to production-grade machine-learning pipelines or when engineers begin streaming sensor data into digital-twin environments. Each shift raises new questions about concurrency, schema evolution, query latitude and cost. Choosing (or revising) the modelling pattern at these inflection points keeps performance predictable and prevents expensive rewrites just as momentum is building.

Four Core Patterns at a Glance

Star and Snowflake

The classic warehouse layout keeps facts in a central table and pushes descriptive data to surrounding dimensions. Analysts love it because joins are obvious, query planners thrive on straight paths, and column-oriented engines can compress repeated values. It shines in finance, sales and any other slice-and-dice BI workload where measures drive every conversation.

Entity-Attribute-Value (EAV)

When products, patients or IoT devices arrive with wildly different sets of attributes, the EAV pattern stores them in a tidy triple: entity ID, attribute name and value. The upside is near-infinite flexibility; the downside is that even a simple report can balloon into self-joins and pivot logic. Use it sparingly, reserve wide columns for high-traffic attributes, and cache pre-aggregations whenever possible.

Document & Key–Value NoSQL

JSON or BSON documents wrap everything a service needs in one package, so developers can add fields without migration scripts or governance committees. Key–value stores push the idea further, returning an entire object in a single call. Both approaches cut release cycles and scale horizontally, but they do so by relaxing strict consistency and sacrificing multi-document joins. They fit customer profiles, content feeds and other cases where speed to market outweighs perfect relational order.

Graph & Network

In fraud scoring, social feeds or supply-chain optimisation, the links between things matter as much as the things themselves. Native graph engines treat nodes and edges as first-class citizens, allowing constant-time hops across complex networks and path queries that SQL can barely express. They demand a mindset shift—embedding business rules in traversals rather than joins—but repay the effort with queries that surface hidden influencers and abnormal transaction chains in seconds.

Decision Framework

Step 1 – Match the Question to the Access Path

Start with the conversations your teams have every day.

Ad-hoc analytics (revenue by month, churn by cohort) favour predictable joins and heavy aggregation; star or snowflake layouts usually win.

Event streams and near-real-time APIs ask for single-row fetches and flexible payloads; document or key–value stores respond in micro-seconds.

Behavioural journeys (who-talked-to-whom, supply-chain hops) belong to graph engines, while highly variable entities lean toward EAV.

Step 2 – Score Complexity versus Stability

Sketch the attributes you expect to add over the next 6–18 months.

If new columns appear once a quarter, a relational schema stays tidy.

Weekly changes or A/B experimentation point to document storage, where each micro-service owns its JSON and migrations are just code deploys.

Continuous evolution plus cross-entity joins? Mix patterns: keep immutable facts in a warehouse and spill the shapeshifting bits into EAV or documents.

Step 3 – Forecast Growth and Cost

Models live inside budgets. Run back-of-the-envelope maths on:

Retention – years of history triple storage on column stores but barely dent a key–value cache that only keeps the latest state.

Index footprint – every secondary index speeds reads yet adds gigabytes; graph databases need far fewer but store extra edge metadata.

Egress & compute – BI workloads pull large result sets, so cloud-to-desktop transfer fees matter, whereas API calls stay within the VPC.

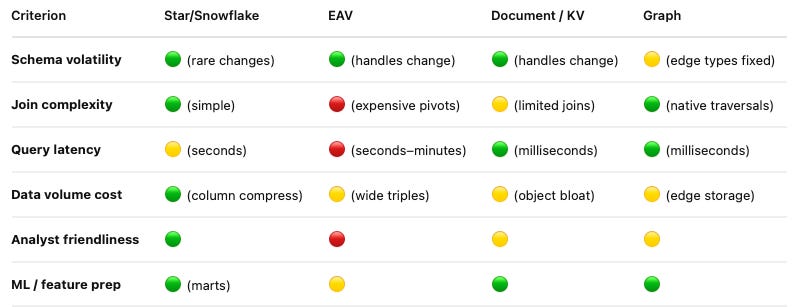

Reusable Traffic-Light Matrix

How to use it:

Mark each row against your workload.

Green = ready out-of-the-box, Yellow = workable with tuning, Red = likely pain.

The pattern (or mix) with the most green squares usually offers the lowest long-term friction.

Pattern Combinations That Work

Finance on a star, catalogue in a document store

Keep the general ledger and KPI dashboards in a classic star (or snowflake) warehouse where tight dimensions, type-2 history and columnar storage pay off. In the same stack, expose the ever-changing product catalogue through a document database—price lists, rich media and variant attributes can evolve without forcing table rebuilds. A simple data-virtualisation layer lets analysts query both worlds with one view.

Feature flags via an EAV side-car

Operational tables remain strictly typed for performance, but a small Entity-Attribute-Value table sits beside them to capture “extras” such as A/B flags, temporary promotions or partner-specific overrides. Product teams flip switches in hours, not sprints, while long-lived attributes graduate into the core schema during the next release cycle.

Graph insight driven by relational CDC

Orders, payments and customer profiles stay in Postgres or SQL Server, mastered by referential integrity. Change-data-capture streams every insert and update into a property-graph service. The graph layer surfaces “customers-who-bought-together”, detects multi-hop fraud rings and feeds real-time recommendations, all without burdening the OLTP cluster.

Governance & Tooling

Versioned schema repo – Store every DDL change in Git and run it through a migration tool such as dbt or Liquibase. Pull requests give architects and engineers a single place to review column additions, type edits or index tweaks before they reach production.

Automated lineage and PII tagging – Connect your catalogue (e.g. OpenMetadata, Azure Purview) to parse each commit, trace column-level flows and flag fields that hold personal data. Dashboards show exactly which reports, APIs or models depend on any given table.

Unit tests for model changes – Treat data structures like code. Write assertions that row counts stay within bounds, surrogate keys remain unique and reference tables never contain nulls. The CI pipeline blocks a merge if a test fails, protecting downstream dashboards and AI jobs from silent breakage.

Common Pitfalls & Quick Fixes

Over-normalising on day one – breaking tables into dozens of reference entities keeps purists happy, but it slows every join and exhausts cloud credits. Begin with a wider design that mirrors the questions analysts ask most often, then refactor only the columns that sit on your hottest paths.

Building without watching the query plan – a model that looks elegant on a whiteboard can explode into scans and temp tables once real traffic arrives. Load a month of production-sized data into a staging cluster, run representative queries, and tune indexes or denormalise columns before the first user logs in.

Assuming one model will rule them all – transaction OLTP, log analytics and machine-learning features each push storage in different directions. Accept a polyglot approach: row-store for orders, columnar star for finance, document or key-value for rapidly changing product details. The extra connector work pays for itself in performance and agility.

Choosing a data-modelling pattern isn’t a once-off technical decision—it’s a strategic lever that shapes insight speed, AI readiness and long-term cost. Matching the model to the moment gives your analysts better answers today and your engineers room to scale tomorrow.

If you’d like a second set of eyes on your current design, or a roadmap for the next phase, let’s talk.